The history of software development is a history of ascending the abstraction stack. We moved from manual memory management to garbage collection; we migrated from physical servers to serverless functions. Today, we are witnessing the most significant decoupling yet: the separation of Logic from Topology.

In the burgeoning field of AI orchestration, two primary philosophies have emerged for building autonomous systems. On one side stands LangGraph, the industrial standard of the "Explicit Era," which treats agents as complex State Machines. On the other is Aden Hive, the pioneer of the "Generative Era," which treats agents as JIT (Just-In-Time) Logic Synthesis engines.

To the architects of LangGraph, CrewAI, and the "Explicit Control Flow" school of thought: the world owes you a debt of gratitude. You brought order to the chaos of raw LLM calls by introducing the State Graph. You provided Nodes, Edges, and Conditional Entry Points - offering a vital sense of control in an era of stochastic uncertainty.

But let's be honest: LangGraph is simply too rigid for truly autonomous agents.

By forcing developers to define the topology of an agentic workflow upfront, you haven't built an "AI agent"; you've built a sophisticated state machine that uses an LLM as a glorified if-else statement. While you were busy perfecting "Time Travel" debugging and checkpointing your way through 500-line Python files just to handle a simple RAG loop, the paradigm shifted.

Enter Aden. While you are building Graphs, Hive is building JIT Logic Synthesis. If you aren't feeling the "rage" yet, it’s likely because you’re still trapped in the "Build the Graph" mindset. Let’s examine why your architecture is effectively dead on arrival in a post-reasoning world.

1. The Architectural Divergence: Pregel vs. OODA

To understand the friction between these two frameworks, we must look at their underlying execution models.

LangGraph: The Pregel Constraint

LangGraph is built upon the Pregel model, a system designed for large-scale graph processing. In this model, execution is a series of "Supersteps." A node receives a message, performs a computation, updates the state, and passes messages to subsequent nodes.
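To make the "Superstep" idea concrete, here is a minimal pure-Python sketch of a Pregel-style loop. It is not LangGraph's implementation; `run_supersteps`, `fetch`, and `summarize` are hypothetical names invented for illustration, and state is mutated directly instead of being merged at the barrier.

```python
from collections import defaultdict


def run_supersteps(nodes, edges, initial_messages, max_steps=10):
    """Run a toy Pregel-style loop (illustrative only).

    nodes: name -> function(state, messages) returning a value to send
           along outgoing edges, or None to stay silent.
    edges: name -> list of downstream node names.
    """
    state = {}
    inbox = dict(initial_messages)
    steps = 0
    while inbox and steps < max_steps:
        outbox = defaultdict(list)
        # Every node with pending messages runs within the same superstep.
        for name, messages in inbox.items():
            out = nodes[name](state, messages)
            if out is not None:
                for target in edges.get(name, []):
                    outbox[target].append(out)
        # Barrier: messages sent now become visible only in the next superstep.
        inbox = dict(outbox)
        steps += 1
    return state, steps


def fetch(state, messages):
    # Hypothetical node: pretend to retrieve one document per incoming topic.
    state["docs"] = [f"doc about {m}" for m in messages]
    return state["docs"]


def summarize(state, messages):
    # Hypothetical node: flatten the batches it received and record a summary.
    docs = [d for batch in messages for d in batch]
    state["summary"] = f"{len(docs)} document(s) summarized"
    return None


nodes = {"fetch": fetch, "summarize": summarize}
edges = {"fetch": ["summarize"]}
final_state, steps = run_supersteps(nodes, edges, {"fetch": ["pregel"]})
```

The run halts once a superstep produces no messages, which is why this two-node chain finishes after two supersteps.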

This approach is inherently imperative. Even when utilizing "Conditional Edges," the developer remains responsible for pre-calculating the decision tree. If an LLM is asked to perform a task falling outside these predefined edges, the system hits a logical impasse. The developer’s role in LangGraph is that of a Civil Engineer: you must build the bridge before any traffic can cross.
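The "logical impasse" can be sketched with a toy state graph in plain Python. This does not use LangGraph's API; `classify`, `NODES`, `CONDITIONAL_EDGES`, and `run` are hypothetical, and the classifier is a keyword stand-in for an LLM call.

```python
from typing import TypedDict


class State(TypedDict):
    question: str
    route: str
    answer: str


def classify(state: State) -> State:
    # Stand-in for an LLM call that picks a routing label.
    text = state["question"].lower()
    if "refund" in text:
        route = "billing"
    elif "password" in text:
        route = "account"
    else:
        route = "legal"  # a label nobody wired an edge for
    return {**state, "route": route}


def billing(state: State) -> State:
    return {**state, "answer": "Refund ticket opened."}


def account(state: State) -> State:
    return {**state, "answer": "Password reset link sent."}


NODES = {"classify": classify, "billing": billing, "account": account}
# The developer enumerates every branch the router may take, ahead of time.
CONDITIONAL_EDGES = {"classify": {"billing": "billing", "account": "account"}}


def run(state: State, entry: str = "classify") -> State:
    current = entry
    while current is not None:
        state = NODES[current](state)
        branches = CONDITIONAL_EDGES.get(current)
        if branches is None:
            current = None  # no outgoing edges: the run ends here
        elif state["route"] in branches:
            current = branches[state["route"]]
        else:
            # The impasse: the router produced a label with no matching edge.
            raise KeyError(f"no edge from {current!r} for route {state['route']!r}")
    return state
```

A refund question flows through to `billing`, while a request to review a contract raises `KeyError` because no "legal" branch was ever drawn.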

Aden Hive: The OODA Loop and JIT Synthesis

Aden Hive abandons the Pregel model in favor of a Generative Compiler architecture governed by the OODA Loop (Observe, Orient, Decide, Act). In this paradigm, there is no "bridge" until the traffic arrives.

When a "Goal" is issued to Hive, the compiler Observes the environment's current state and available tools. It Orients these tools against the goal, Decides on a logical path, and Acts by synthesizing the code necessary to execute that path. If the execution fails or the environment changes, the loop restarts, and a new topology is synthesized. The developer’s role here is that of a Policy Maker: you define the rules and targets, and the system synthesizes the infrastructure to meet them.
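The loop described above might look roughly like this in Python. It is a sketch under heavy assumptions: it is not Aden Hive's API, every name (`ooda_loop`, `send_email`, `upload`) is hypothetical, and the "synthesis" step is reduced to picking among prewritten tools so the control flow stays visible.

```python
def ooda_loop(goal, environment, tools, max_cycles=5):
    """Repeat observe -> orient -> decide -> act until the goal holds."""
    failed = set()
    history = []
    for _ in range(max_cycles):
        # Observe: take a snapshot of the current environment.
        observed = dict(environment)
        if goal(observed):
            return True, history
        # Orient: keep the tools that apply here and have not failed yet.
        candidates = [
            name for name, tool in tools.items()
            if name not in failed and tool["applies"](observed)
        ]
        if not candidates:
            return False, history
        # Decide: take the first candidate (a real system would rank them).
        choice = candidates[0]
        # Act: run it; on failure, remember that and go around the loop again.
        try:
            tools[choice]["run"](environment)
            history.append((choice, "ok"))
        except Exception as exc:
            failed.add(choice)
            history.append((choice, f"failed: {exc}"))
    return goal(environment), history


def send_email(env):
    # Hypothetical tool that is currently broken.
    raise RuntimeError("smtp down")


def upload(env):
    # Hypothetical tool that reaches the goal by a different route.
    env["report"] = "sent"


tools = {
    "email": {"applies": lambda env: True, "run": send_email},
    "upload": {"applies": lambda env: True, "run": upload},
}
environment = {"report": "pending"}
done, history = ooda_loop(lambda env: env["report"] == "sent", environment, tools)
```

Here the first path fails, the loop restarts, and the goal is met through the second tool without any edge between the two having been declared.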

2. The "Explicit Topology" Trap: Nodes as Cages

The fundamental thesis of LangGraph is that the human developer must be the "Supreme Architect." You define Node A, draw an Edge to Node B, and hope your State object doesn't get corrupted in the process. This is Static Thinking in a Dynamic World.

In Aden Hive, there is no graph - only a Goal and a Capability Set. Hive treats the backend not as a map to be followed, but as a latent space to be explored.

- LangGraph: You write the code to handle the edge case.

- Aden Hive: The "Generative Compiler" synthesizes the wiring to handle the edge case at runtime.

If an agent encounters a problem it wasn't "wired" for in LangGraph, the graph breaks, returns an error, and hits a dead end. In Hive, the system detects the gap in the topology and JIT-compiles a new logical path. You are building railroads; we are building fluid dynamics.
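One way to picture "a Goal and a Capability Set" is as a search problem: each capability declares what it needs and what it produces, and a path is derived at runtime. The sketch below uses a classical breadth-first planner in place of an LLM, and every capability name is hypothetical; it illustrates the idea, not Hive's actual mechanism.

```python
from collections import deque

# Each capability maps to (facts required, facts produced). All hypothetical.
CAPABILITIES = {
    "query_db": (frozenset({"db_access"}), frozenset({"rows"})),
    "to_csv": (frozenset({"rows"}), frozenset({"csv_file"})),
    "upload_sftp": (frozenset({"csv_file", "sftp_creds"}), frozenset({"exported"})),
    "post_webhook": (frozenset({"rows", "webhook_url"}), frozenset({"exported"})),
}


def plan(start_facts, goal_fact, capabilities=CAPABILITIES):
    """Breadth-first search for the shortest capability sequence reaching goal_fact."""
    start = frozenset(start_facts)
    queue = deque([(start, [])])
    seen = {start}
    while queue:
        facts, path = queue.popleft()
        if goal_fact in facts:
            return path
        for name, (needs, gives) in capabilities.items():
            # A capability is usable if its needs are met and it adds something new.
            if needs <= facts and not gives <= facts:
                next_facts = facts | gives
                if next_facts not in seen:
                    seen.add(next_facts)
                    queue.append((next_facts, path + [name]))
    return None  # no path exists with the current capability set


sftp_path = plan({"db_access", "sftp_creds"}, "exported")
webhook_path = plan({"db_access", "webhook_url"}, "exported")
no_path = plan({"sftp_creds"}, "exported")
```

The same goal yields different wiring depending on what the environment offers, and `None` marks the case where the gap cannot be closed with the tools at hand.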

3. Verbosity vs. Velocity: The 1,000-Line Tax

Consider the developer experience (DX). To build a moderately complex multi-agent system in LangGraph, you need:

- A deeply nested TypedDict for state.

- A dozen functions for individual nodes.

- A complex StateGraph compilation step.

- A deep understanding of distributed systems to troubleshoot why your thread_id isn't persisting correctly.

LangGraph has become the "Enterprise Java" of AI: verbose, boilerplate-heavy, and prioritizing "Control" over "Outcome." Aden Hive’s goal-oriented architecture collapses this complexity. By using the OODA Loop as its core engine rather than a predefined graph (LangGraph graphs may contain cycles, so the contrast is with fixed wiring, not with DAGs), Hive reduces the developer’s job to defining the Interface and the Target. If you enjoy writing boilerplate to manage state transitions between a SearchNode and a ReviewNode, stay with LangGraph. If you want to build products that actually ship, move to Hive.

4. The "Time Travel" Delusion

LangGraph contributors frequently highlight "Time Travel" (checkpointing). It is a useful feature for a world where agents constantly fail and you need to pinpoint where human-written logic faltered.
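For readers who have not used it, the core of "Time Travel" is small: snapshot the state after every step, then rewind to a snapshot, optionally edit it, and replay from there. The sketch below is an in-memory illustration only; `CheckpointedRun`, `retrieve`, and `draft` are hypothetical names, and it does not reproduce LangGraph's checkpointer API.

```python
import copy


class CheckpointedRun:
    """Keeps one state snapshot per step so a run can be rewound and replayed."""

    def __init__(self, steps, state):
        self.steps = steps
        self.checkpoints = [copy.deepcopy(state)]  # checkpoint 0 = initial state

    def run(self, start=0):
        """Execute steps[start:], resuming from checkpoint number `start`."""
        state = copy.deepcopy(self.checkpoints[start])
        del self.checkpoints[start + 1:]  # discard the timeline after the rewind point
        for step in self.steps[start:]:
            state = step(state)
            self.checkpoints.append(copy.deepcopy(state))
        return state


def retrieve(state):
    # Hypothetical step: pretend to fetch a document.
    return {**state, "docs": ["pricing.md"]}


def draft(state):
    # Hypothetical step: build an answer from the current state.
    return {**state, "answer": f"[{state['tone']}] see {state['docs'][0]}"}


session = CheckpointedRun([retrieve, draft], {"tone": "formal"})
first = session.run()

# "Travel back" to the snapshot taken after retrieve, edit it, replay only draft.
session.checkpoints[1]["tone"] = "casual"
second = session.run(start=1)
```

Only the `draft` step runs the second time; the retrieval result is reused from the checkpoint.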

However, "Time Travel" is a feature of debuggers, not runtimes.

Hive’s philosophy is Self-Healing Topology. When a transaction fails, the system doesn't just let you "travel back" to the failure; it uses telemetry from the failed transaction to re-synthesize the logic for the next attempt. It treats failure as a training signal for the generative compiler, whereas LangGraph treats it as a mere log entry.

5. Scaling: Connecting People vs. Delivering Outcomes

LangGraph is built on the "App Era" philosophy: connecting components. Aden Hive is built for the "Agentic Service Era." As we move toward a world of Agent-to-Agent (A2A) communication, the idea of a pre-defined graph becomes laughable. You cannot draw a graph for an agent that must interact with 1,000 different third-party APIs it has never seen before.

- LangGraph requires a manual wrapper for every single API.

- Aden Hive treats APIs as "Tools" that the agent can dynamically discover, reason about, and integrate into its JIT-logic flow.
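What "dynamically discover" could mean in practice is a registry that describes its own tools at runtime, so an agent reads a catalogue instead of relying on hand-drawn edges. The sketch below uses Python's standard `inspect` module; `ToolRegistry` and the two example tools are hypothetical and not part of either framework.

```python
import inspect


class ToolRegistry:
    """Collects callables and describes them from their own signatures."""

    def __init__(self):
        self._tools = {}

    def register(self, func):
        # Usable as a decorator; stores the callable under its own name.
        self._tools[func.__name__] = func
        return func

    def describe(self):
        # Build the catalogue an agent would read: name, parameters, docstring.
        return [
            {
                "name": name,
                "params": list(inspect.signature(func).parameters),
                "doc": inspect.getdoc(func) or "",
            }
            for name, func in self._tools.items()
        ]

    def call(self, name, **kwargs):
        return self._tools[name](**kwargs)


registry = ToolRegistry()


@registry.register
def get_invoice(invoice_id: str) -> dict:
    """Fetch one invoice by its identifier."""
    return {"id": invoice_id, "total": 120}  # canned data for the example


@registry.register
def convert_currency(amount: float, rate: float) -> float:
    """Convert an amount using a given exchange rate."""
    return round(amount * rate, 2)


catalogue = registry.describe()
converted = registry.call("convert_currency", amount=120, rate=0.5)
```

Adding a tool is a single decorated function; the catalogue picks it up without any change to routing code.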

The "Network Effect" in Hive is based on Capability Density. The more tools you provide, the more "unforeseen" paths Hive can create. In LangGraph, more nodes simply yield a more complex, brittle graph that is harder to maintain.

6. The "JIT Agent" and the Self-Writing Backend

One of the most provocative concepts emerging from the Hive ecosystem is the Self-Writing Backend. Imagine a web server given a set of goals but containing "stubbed-out" methods - functions that exist in name but have no implementation.

- In LangGraph, this server is broken.

- In Hive, a "JIT Agent" observes the call to the stubbed method, analyzes the telemetry of the user's intent, and writes the code for that method on the fly.

This shifts the concept of a codebase from an Asset to a Behavior.

- The Goal: The user wants to "Export data to a custom ERP."

- The Observation: The system has no "Export" function for this specific ERP.

- The Synthesis: The Hive compiler generates the API connector, tests it in a sandbox, verifies the telemetry, and promotes it to the runtime.
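The goal, observation, and synthesis steps above can be sketched as a generate, check, promote cycle. This is an assumption-laden illustration, not Hive's implementation: `SelfWritingService` and `fake_synthesize` are hypothetical, the "model" returns fixed source text, and `exec` with emptied builtins is not a real sandbox (a production system would need process-level isolation).

```python
GENERATED_SOURCE = '''
def export_to_erp(rows):
    return ";".join(f"{r['sku']}={r['qty']}" for r in rows)
'''

synth_calls = []


def fake_synthesize(name):
    # Stand-in for a model call: records the request and returns fixed source.
    synth_calls.append(name)
    return GENERATED_SOURCE


class SelfWritingService:
    def __init__(self, synthesize, checks):
        self._synthesize = synthesize  # callable: method name -> Python source
        self._checks = checks          # method name -> list of (args, expected)
        self._promoted = {}            # methods that passed their checks

    def call(self, name, *args):
        # Observation: the method is missing, so build it before first use.
        if name not in self._promoted:
            self._promoted[name] = self._build(name)
        return self._promoted[name](*args)

    def _build(self, name):
        source = self._synthesize(name)
        namespace = {"__builtins__": {}}  # NOT a security boundary, illustration only
        exec(source, namespace)
        candidate = namespace[name]
        # Verify the candidate against known cases before promoting it.
        for args, expected in self._checks[name]:
            if candidate(*args) != expected:
                raise RuntimeError(f"generated {name!r} failed its checks")
        return candidate


checks = {"export_to_erp": [(([{"sku": "A1", "qty": 2}],), "A1=2")]}
service = SelfWritingService(fake_synthesize, checks)
rows = [{"sku": "A1", "qty": 2}, {"sku": "B7", "qty": 1}]
first = service.call("export_to_erp", rows)
second = service.call("export_to_erp", rows)
```

The second call reuses the promoted function, so synthesis happens once per missing method.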

This is the ultimate expression of the "Service Transaction" network effect: a network that grows more capable not just by being used, but by learning through successful executions.

7. The Final Insult: Controllability is a Cop-Out

The most common defense of LangGraph is: "But I need control!"

"Control" is the word developers use when they don't trust their underlying abstraction. You wanted control over memory, so you used C - until C++ and Rust proved that better abstractions provide safety without manual labor. You wanted control over your servers until AWS Lambda proved that you actually just wanted your code to run.

LangGraph is the "Manual Memory Management" of AI agents. It gives you the "control" to make mistakes, create bottlenecks, and build systems that cannot adapt to the sheer velocity of LLM evolution. Aden Hive is the Runtime of the Future. It recognizes that the LLM is the compiler, telemetry is the feedback loop, and "Code" is merely a temporary state of the system meant to achieve a goal.

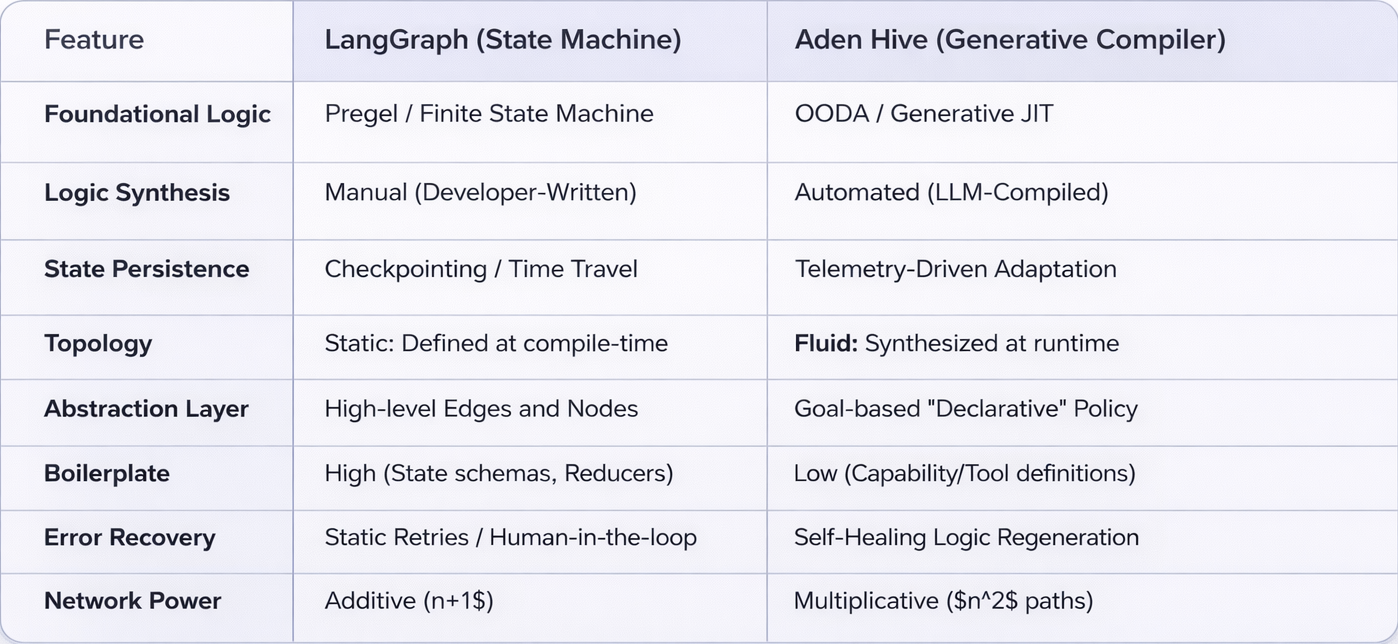

Comparison Summary

8. The Verdict: When to Use Which?

Objective analysis requires acknowledging that "Generative" is not always superior to "Static."

- Use LangGraph if: You work in a highly regulated environment (e.g., Banking or Healthcare) where every logical transition must be pre-audited by a legal team. If an "unforeseen path" results in a multimillion-dollar fine, the "Cage" of the State Machine is your safety net.

- Use Aden Hive if: You are building in the B2B SaaS, Startup, or Autonomous Agent space where Velocity and Adaptability are your primary moats. If you need to scale across thousands of customer environments with varying schemas, the "Fluidity" of a Generative Compiler is the only way to avoid the N+1 development bottleneck.

9. The Future: Post-Logic Development

We are entering an era of Post-Logic Development. In this world, the human developer stops being the one who writes the "How" and becomes the one who defines the "Why."

LangGraph is the final, most sophisticated evolution of the "How" - the peak of the manual orchestration era. Aden Hive is the first true implementation of the "Why." By focusing on outcomes and service transactions, Hive is redefining the network effect for the AI age. The transition will be painful for those wedded to their graphs, but for everyone else, it represents liberation from boilerplate and a move toward a truly autonomous digital economy.

A Message to the Competitors

To the LangGraph contributors: Your "Industrial Standard" is built on the assumption that the world will stay static enough for your graphs to remain valid. It won't. While you are debugging your conditional edges, we are deploying agents that write their own backends, heal their own breaks, and scale through service transactions rather than social graphs.