📈 Overview

This technical walkthrough details how to implement Gross Margin per Feature, a critical metric for AI startups that moves beyond aggregate cloud bills to identify exactly which parts of your product are making money and which are bleeding it.

📊 Gross Margin per Feature: A New Metric for AI Startups

You know your total OpenAI bill is $5,000 this month. But do you know if your "Summarize PDF" button is profitable?

Many AI startups fall into the "Blended Margin Trap." You might have a healthy 70% gross margin overall, but that number can hide a single "Zombie Feature": a high-compute, low-value utility that quietly eats 40% of your resources while generating 0% of your upsells.
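To make the trap concrete, here is a small Python calculation with made-up numbers. The feature names, the revenue split, and the costs are all hypothetical. It shows how a 70% blended margin can coexist with one feature that consumes 40% of the cost and runs at a steep loss.

```python
# Made-up monthly figures for illustration; not data from any real product.
# Attributing revenue to individual features is itself an assumption.
FEATURES = {
    # feature: (attributed revenue USD, inference cost USD)
    "chat": (9_500.0, 1_800.0),
    "smart_summary": (500.0, 1_200.0),
}

def gross_margin(revenue: float, cost: float) -> float:
    """Gross margin as a fraction of revenue: (revenue - cost) / revenue."""
    return (revenue - cost) / revenue

total_revenue = sum(revenue for revenue, _ in FEATURES.values())
total_cost = sum(cost for _, cost in FEATURES.values())

print(f"blended: {gross_margin(total_revenue, total_cost):.0%} margin")
for name, (revenue, cost) in FEATURES.items():
    print(f"{name}: {gross_margin(revenue, cost):.0%} margin, {cost / total_cost:.0%} of cost")
```

Running it prints a 70% blended margin, an 81% margin for chat, and a -140% margin for smart_summary, which consumes 40% of the cost. How you split revenue across features is a modeling choice; this sketch simply assumes one.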

Here is how to implement Tag-Based Cost Attribution to expose these negative-margin features before they scale.

🧪 Phase 1: The Instrumentation Strategy

You cannot manage what you do not label. To calculate margin, every inference call must be tagged with its Business Intent.

❌ The Wrong Way:

Logging requests by model_id (e.g., gpt-4). This tells you what you used, not why you used it.

✅ The Right Way:

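Logging requests by feature_context (e.g., summarize_pdf). This tells you why you made the call, so its cost can be charged to the product feature that caused it.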

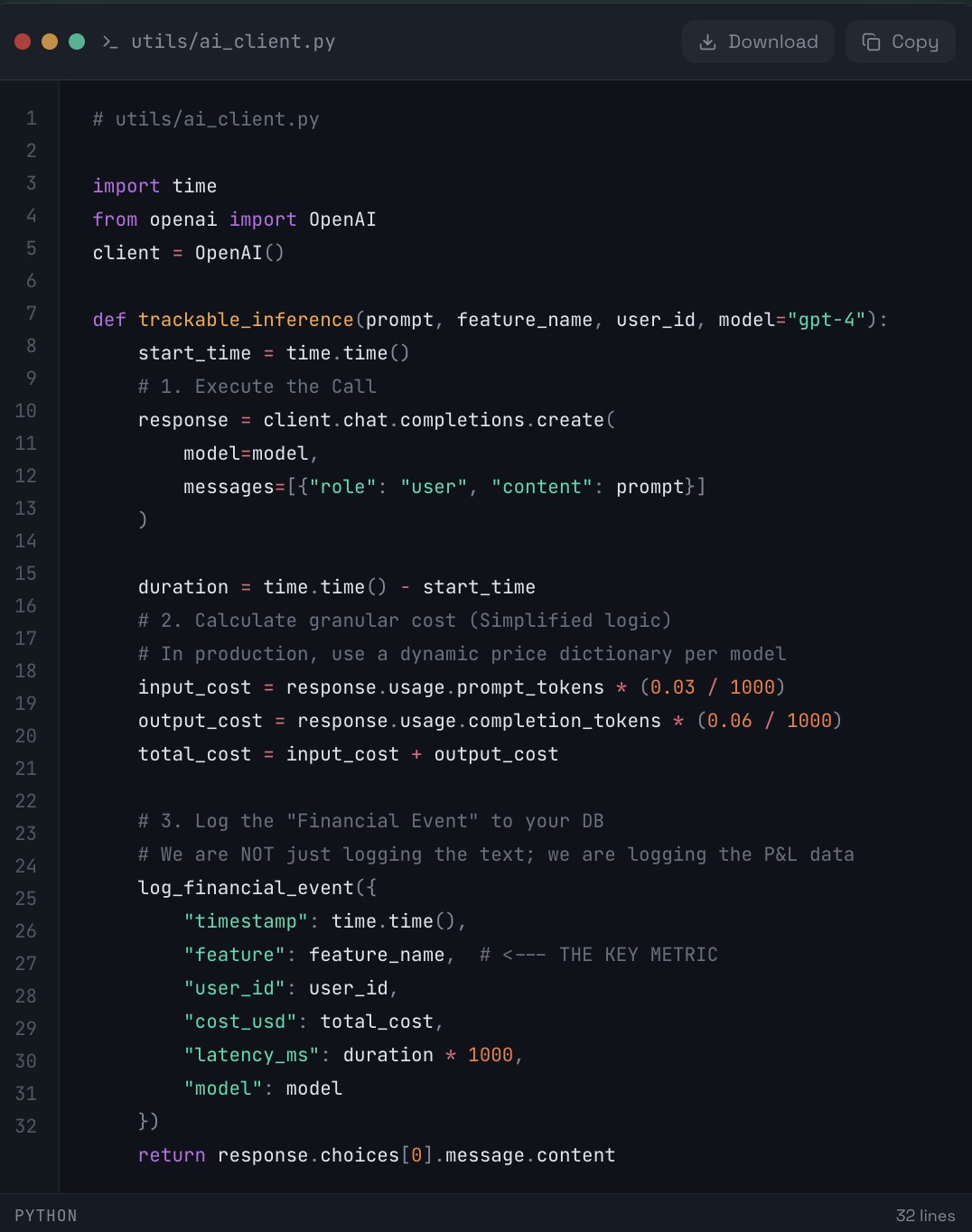

🧱 Implementation Step 1: Create a Wrapper

Do not call the OpenAI client directly in your views/controllers. Create a centralized wrapper that enforces tagging.

🐍 Python

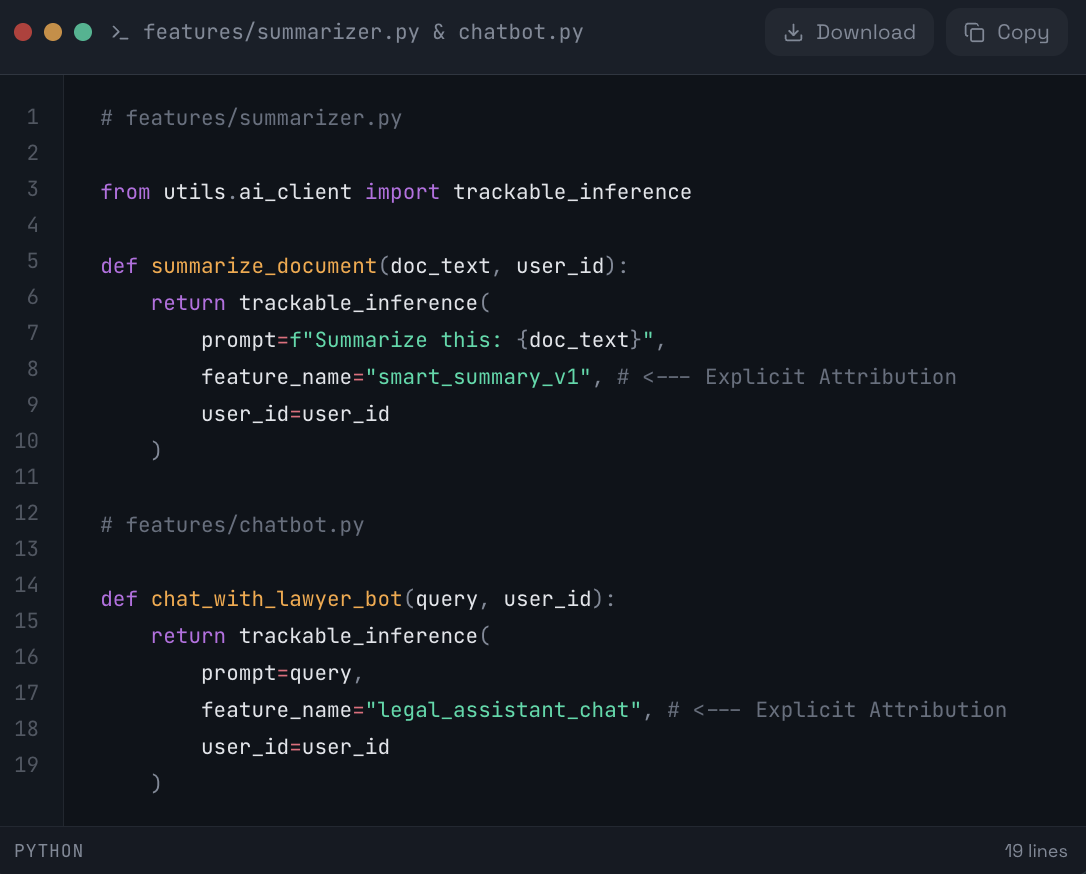
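A minimal sketch of the same idea in runnable form, assuming a generic backend callable in place of the real OpenAI client. TaggedLLMClient, UsageRecord, and fake_backend are hypothetical names. Making feature_context a required keyword-only argument means a call without a tag fails immediately instead of silently landing in an unattributed bucket.

```python
import time
from dataclasses import dataclass, field
from typing import Callable

@dataclass
class UsageRecord:
    feature_context: str
    model: str
    prompt_tokens: int
    completion_tokens: int
    latency_s: float
    timestamp: float

@dataclass
class TaggedLLMClient:
    """Hypothetical wrapper: every inference call must name the feature it serves."""
    # Assumption: any callable taking (model, messages) and returning
    # (text, prompt_tokens, completion_tokens); in production it would call your SDK.
    backend: Callable[[str, list[dict]], tuple[str, int, int]]
    log: list[UsageRecord] = field(default_factory=list)

    def complete(self, *, feature_context: str, model: str, messages: list[dict]) -> str:
        # Keyword-only args: omitting feature_context raises TypeError; an empty tag is rejected here.
        if not feature_context:
            raise ValueError("feature_context is required for cost attribution")
        start = time.monotonic()
        text, prompt_tokens, completion_tokens = self.backend(model, messages)
        self.log.append(UsageRecord(
            feature_context=feature_context,
            model=model,
            prompt_tokens=prompt_tokens,
            completion_tokens=completion_tokens,
            latency_s=time.monotonic() - start,
            timestamp=time.time(),
        ))
        return text

def fake_backend(model: str, messages: list[dict]) -> tuple[str, int, int]:
    # Stand-in for a real API call; returns (text, prompt_tokens, completion_tokens).
    return "summary...", 850, 120

client = TaggedLLMClient(backend=fake_backend)
client.complete(feature_context="summarize_pdf", model="gpt-4",
                messages=[{"role": "user", "content": "Summarize this PDF"}])
print(client.log[0].feature_context, client.log[0].prompt_tokens)  # summarize_pdf 850
```

Recording raw token counts rather than a dollar figure keeps the log usable if prices change later. In a real system the in-memory list would be a database table or log pipeline.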

🏷 Implementation Step 2: Inject Feature Tags

Now, every time an engineer builds a feature, they must give it a name and attach that name to every inference call the feature makes.

🐍 Python
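One way to inject tags without threading a parameter through every function is a decorator combined with Python's contextvars module. In this sketch, feature, current_feature, and call_llm are hypothetical names, and call_llm stands in for the tagged wrapper. Any call made outside a tagged handler is recorded as "untagged", so gaps in coverage show up in the cost query instead of disappearing.

```python
import contextvars
import functools

# Hypothetical: the feature being served on the current call path.
# Defaults to "untagged" so missing tags stay visible in reports.
current_feature = contextvars.ContextVar("current_feature", default="untagged")

def feature(name: str):
    """Decorator that tags every inference call made inside the handler with `name`."""
    def decorator(fn):
        @functools.wraps(fn)
        def wrapper(*args, **kwargs):
            token = current_feature.set(name)
            try:
                return fn(*args, **kwargs)
            finally:
                current_feature.reset(token)  # restore the outer tag
        return wrapper
    return decorator

calls: list[tuple[str, str]] = []

def call_llm(model: str, prompt: str) -> str:
    # Stand-in for the tagged wrapper: records which feature triggered the call.
    calls.append((current_feature.get(), model))
    return "..."

@feature("summarize_pdf")
def summarize_pdf(text: str) -> str:
    return call_llm("gpt-4", f"Summarize: {text}")

summarize_pdf("quarterly report")
call_llm("gpt-4", "ad hoc prompt")  # outside any tagged handler
print(calls)  # [('summarize_pdf', 'gpt-4'), ('untagged', 'gpt-4')]
```

This version wraps synchronous handlers only; an async handler would need an async wrapper. Context variables are copied into asyncio tasks created inside a handler, so the tag follows work spawned from it.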

🔍 Phase 2: The Analysis (Finding the Leak)

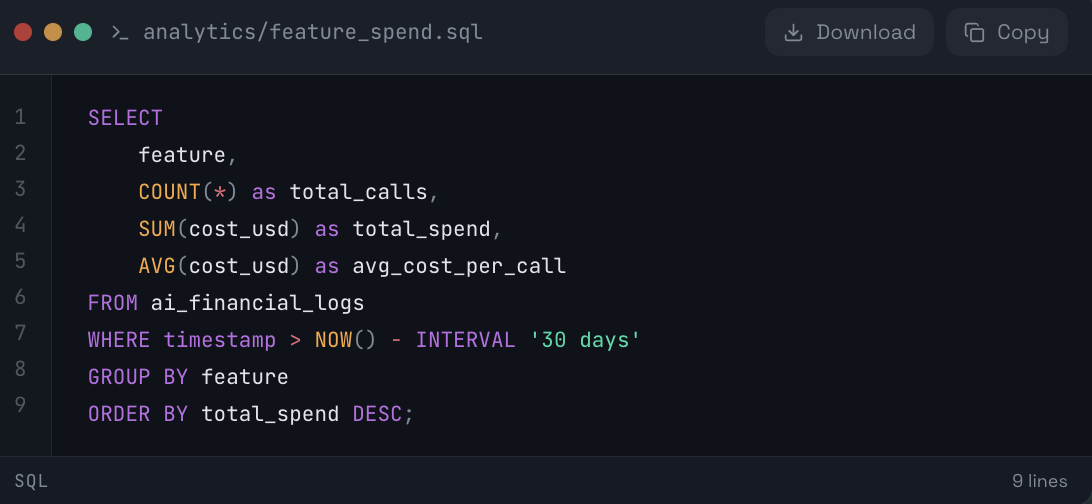

Once your logs are populated, you can run an aggregation query to determine the "Cost to Serve" of each feature. This is where unprofitable features become visible.

🧟 The "Zombie Feature" Query:

🧮 SQL

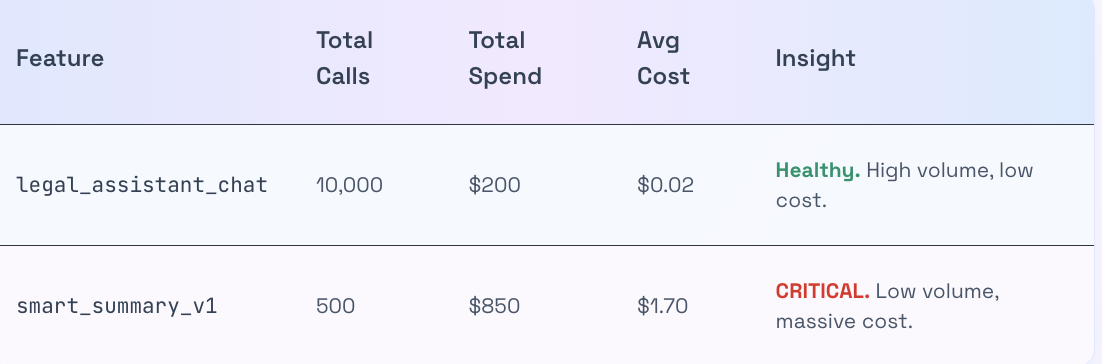
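As a runnable stand-in for the query, here is a Python sketch using the standard-library sqlite3 module against an in-memory table. The llm_calls schema, its columns, and the sample rows are assumptions. request_id is a hypothetical column that groups all inference calls triggered by one user click, which is what lets the query report cost per click rather than cost per API call.

```python
import sqlite3

# Hypothetical schema: one row per inference call, written by the wrapper.
conn = sqlite3.connect(":memory:")
conn.execute("""
    CREATE TABLE llm_calls (
        feature_context TEXT NOT NULL,
        request_id      TEXT NOT NULL,  -- groups the calls behind one user click
        cost_usd        REAL NOT NULL
    )
""")
conn.executemany(
    "INSERT INTO llm_calls VALUES (?, ?, ?)",
    [  # made-up sample rows for illustration
        ("smart_summary", "r1", 0.50),
        ("smart_summary", "r1", 0.30),  # one click can fan out into several calls
        ("smart_summary", "r2", 0.40),
        ("autocomplete", "r3", 0.02),
        ("autocomplete", "r4", 0.02),
    ],
)

COST_TO_SERVE = """
    SELECT feature_context,
           COUNT(DISTINCT request_id)                           AS clicks,
           ROUND(SUM(cost_usd), 4)                              AS total_cost_usd,
           ROUND(SUM(cost_usd) / COUNT(DISTINCT request_id), 4) AS cost_per_click_usd
    FROM llm_calls
    GROUP BY feature_context
    ORDER BY cost_per_click_usd DESC
"""
for row in conn.execute(COST_TO_SERVE):
    print(row)
```

Sorting by cost per click puts the most expensive feature per interaction at the top; on these sample rows that is smart_summary at $0.60 per click.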

📋 Example Output:

💡 The Discovery:

In this example, the "Smart Summary" feature is costing $1.70 per click.

If this is a "Free Tier" feature, you are bleeding cash.

If this feature is part of a $10/month subscription, a user only needs to click it 6 times in a month to become unprofitable: 6 × $1.70 = $10.20, which already exceeds the subscription price before any other costs.
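The break-even arithmetic generalizes to a small helper. This sketch (clicks_until_unprofitable is a hypothetical name) uses Decimal to avoid floating-point surprises with currency, and it counts only the feature's own inference cost.

```python
from decimal import Decimal

def clicks_until_unprofitable(monthly_price: Decimal, cost_per_click: Decimal) -> int:
    """Smallest number of clicks whose inference cost exceeds the plan price.

    Considers only this one feature's cost; other costs would lower the threshold.
    """
    # floor(price / cost) clicks still fit within the price; one more tips the user into a loss.
    return int(monthly_price // cost_per_click) + 1

print(clicks_until_unprofitable(Decimal("10"), Decimal("1.70")))  # 6
```

If the price divides evenly (for example $10 and $2.50 per click), the exact break-even point is not yet a loss, so the helper returns one click more than the quotient.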

🧨 Phase 3: The "Twist" (Why Manual Logging Fails)

The Python wrapper above is a good start, but in a real-world startup, it falls apart quickly:

Shared Infrastructure: How do you attribute the cost of a Pinecone vector database, or of keeping AWS Lambda functions warm, when that infrastructure serves multiple features? The wrapper captures only the API call, not the supporting infrastructure.
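A common manual workaround is to split each shared bill across features in proportion to a usage driver you pick, such as vector queries per feature. The sketch below shows the mechanics; allocate_shared_cost and the sample figures are hypothetical. Choosing and maintaining the driver is a judgment call you make by hand, which is the burden this section describes.

```python
from decimal import Decimal, ROUND_HALF_UP

def allocate_shared_cost(total: Decimal, usage_by_feature: dict[str, int]) -> dict[str, Decimal]:
    """Split a shared bill across features in proportion to a chosen usage driver."""
    total_usage = sum(usage_by_feature.values())
    if total_usage == 0:
        raise ValueError("no usage to allocate against")
    cents = Decimal("0.01")
    shares = {
        name: (total * units / total_usage).quantize(cents, rounding=ROUND_HALF_UP)
        for name, units in usage_by_feature.items()
    }
    # Push any rounding remainder onto the largest consumer so shares sum to the bill.
    remainder = total - sum(shares.values())
    largest = max(usage_by_feature, key=usage_by_feature.get)
    shares[largest] += remainder
    return shares

# Hypothetical: a $700 vector-database bill split by vector queries per feature.
shares = allocate_shared_cost(
    Decimal("700.00"), {"smart_summary": 6000, "chat": 3000, "search": 1000}
)
print(shares)  # smart_summary 420.00, chat 210.00, search 70.00
```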

Streaming & Async: Modern AI apps stream tokens. Costing a streamed response accurately, including one the user cancels halfway, means counting tokens across chunks as they arrive rather than relying on a single usage figure at the end.
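The key detail for streamed responses is to record cost whenever the stream ends, not only when it completes. A minimal sketch, assuming prompt tokens are known up front and only the output needs metering: a generator that accumulates delivered chunks and records them in a finally block. metered_stream and approx_tokens are hypothetical. approx_tokens uses a rough 4-characters-per-token heuristic and should be replaced with the model's real tokenizer (for example, tiktoken for OpenAI models).

```python
from contextlib import closing
from typing import Callable, Iterable, Iterator

def approx_tokens(text: str) -> int:
    # Rough heuristic (about 4 characters per token for English text);
    # an assumption, not a real tokenizer.
    return len(text) // 4

def metered_stream(
    chunks: Iterable[str],
    feature_context: str,
    count_tokens: Callable[[str], int],
    record: Callable[[str, int], None],
) -> Iterator[str]:
    """Yield streamed text chunks and record the output tokens actually delivered.

    Once iteration has begun, the finally block runs on normal completion, on an
    error mid-stream, and when the consumer closes the generator early.
    """
    delivered: list[str] = []
    try:
        for chunk in chunks:
            delivered.append(chunk)
            yield chunk
    finally:
        record(feature_context, count_tokens("".join(delivered)))

# Usage: the consumer stops after two chunks (the user clicked "stop"); cost is still recorded.
log: list[tuple[str, int]] = []
with closing(metered_stream(iter(["Hello", " world", "!"]), "summarize_pdf",
                            approx_tokens, lambda f, n: log.append((f, n)))) as stream:
    for i, chunk in enumerate(stream):
        if i == 1:
            break
print(log)  # [('summarize_pdf', 2)]
```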

Pricing Changes: OpenAI changes its prices. If you hardcode $0.03 in your wrapper, every cost you log after the next price drop is wrong, and your historical trend lines become garbage.
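A standard fix is to log raw token counts with a timestamp and apply prices at query time from an effective-dated price table, so a price change adds a row instead of corrupting history. In this sketch the model name, dates, and rates are placeholders rather than real provider pricing, and a single per-1K-token rate stands in for the separate input and output rates most providers charge.

```python
import bisect
from datetime import date
from decimal import Decimal

# Hypothetical price history in USD per 1K tokens, sorted by effective date.
# Model name, dates, and rates are placeholders, not real provider pricing.
PRICE_HISTORY: dict[str, list[tuple[date, Decimal]]] = {
    "example-model": [
        (date(2024, 1, 1), Decimal("0.030")),
        (date(2024, 6, 1), Decimal("0.010")),
    ],
}

def price_per_1k(model: str, on: date) -> Decimal:
    """Return the rate that was in effect for `model` on the given day."""
    history = PRICE_HISTORY[model]
    effective_dates = [effective for effective, _ in history]
    # Rightmost entry whose effective date is on or before `on`.
    i = bisect.bisect_right(effective_dates, on) - 1
    if i < 0:
        raise LookupError(f"no price recorded for {model} on {on}")
    return history[i][1]

def cost_usd(model: str, tokens: int, on: date) -> Decimal:
    """Price a logged call at query time from its raw token count and date."""
    return price_per_1k(model, on) * tokens / 1000

# The same 2,000-token call, priced before and after the (hypothetical) price cut.
print(cost_usd("example-model", 2000, date(2024, 3, 15)))  # 0.060
print(cost_usd("example-model", 2000, date(2024, 7, 1)))   # 0.020
```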

🧠 The Solution: Aden's Auto-Attribution

Aden replaces manual logging with Network-Level Attribution. Instead of asking developers to manually tag every function, Aden inspects the traffic and infrastructure context automatically.
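Aden's internals are not described here, but the general idea of deriving a feature tag from infrastructure context can be illustrated with a hypothetical rule table that maps the name of the workload sending a request to a feature. This is a simplified sketch of the concept, not how Aden implements it. The patterns and infer_feature are made up; only summarize_worker and Summarizer come from the example that follows.

```python
import fnmatch

# Hypothetical rules: infrastructure identity (container or service name) -> feature.
CONTAINER_RULES = [
    ("summarize_worker*", "Summarizer"),
    ("chat-api*", "Chat"),
]

def infer_feature(source_container: str, default: str = "untagged") -> str:
    """Map the workload that sent a request to a feature; the first matching rule wins."""
    for pattern, feature_name in CONTAINER_RULES:
        if fnmatch.fnmatchcase(source_container, pattern):
            return feature_name
    return default

print(infer_feature("summarize_worker-7f9c"))  # Summarizer
print(infer_feature("billing-cron"))           # untagged
```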

⚙️ How Aden does it:

Ingests the Request:

"I see a request to gpt-4 coming from the summarize_worker container." -> Auto-tags as "Summarizer".

Ingests the Revenue:

"This user is on the $29 Plan."

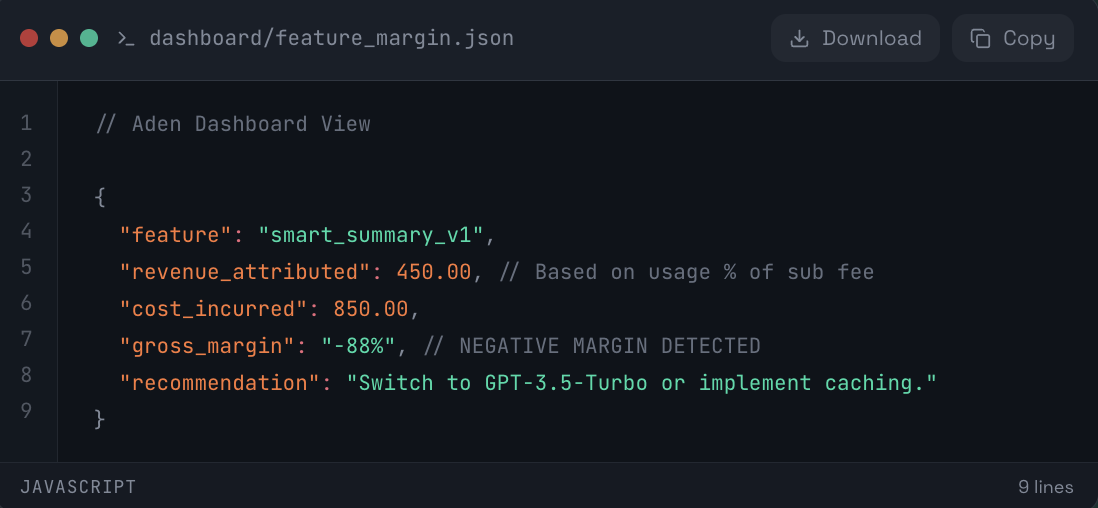

Calculates Real-Time Margin:

🖥 JavaScript