The Agentic Singularity: A Comparative Architectural Analysis of State-Based vs. Generative Frameworks

The era of "Hello World" agents is over. We have moved beyond simple Chain-of-Thought prompting into the realm of Cognitive Architectures, systems that require robust state management, cyclic graph theory, and deterministic control flow.

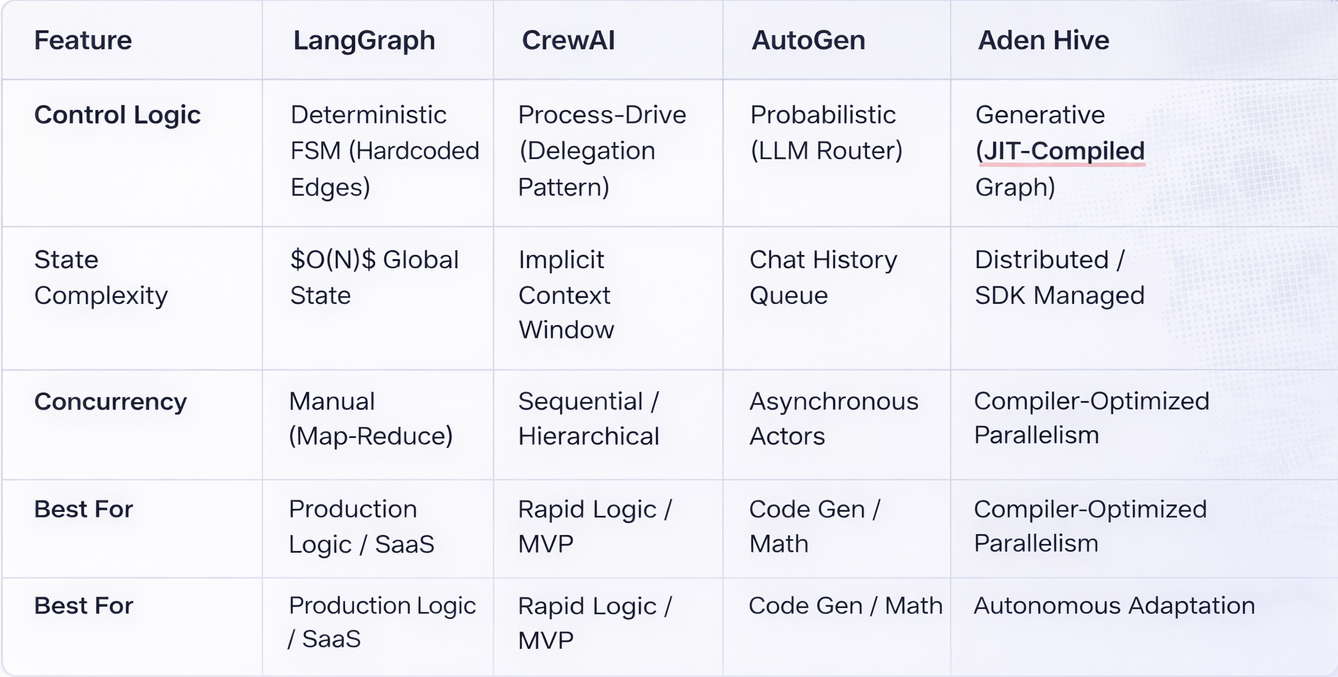

This analysis deconstructs the five dominant architectures (LangGraph, CrewAI, AutoGen, LlamaIndex, and Aden Hive), evaluating them not on marketing claims but on their underlying algorithmic implementations, state transition logic, and distributed consistency models.

1. LangGraph (The Finite State Machine)

Architectural Paradigm: Graph-Based State Machines

Mathematical Model:

LangGraph is not merely a "graph" library; its runtime is modeled on Pregel, Google's model for large-scale graph processing. It treats agents as nodes in a state machine where the edges represent conditional logic.

The Internals

Unlike a DAG (Directed Acyclic Graph), LangGraph explicitly enables cyclic execution. The architecture relies on a shared, immutable Global State Schema.

- State Definition: You define the state schema as a TypedDict or Pydantic model.

- Node Execution: Each node is a function that receives the current state and returns a state update (diff), not a new state.

- State Reducer: The system merges each update into the current state through a per-key reducer. This keeps merges well defined and allows parallel branch execution (map-reduce patterns).
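The reducer step can be illustrated without the library. The following is a minimal plain-Python sketch, not LangGraph's API: `REDUCERS`, `apply_update`, and the two node functions are hypothetical names chosen for illustration. It assumes one merge function per state key and plain overwrite for every other key.

```python
import operator
from typing import Any, Callable, Dict

# Hypothetical reducer table: one merge function per state key.
# Keys without an entry are overwritten by the update.
REDUCERS: Dict[str, Callable[[Any, Any], Any]] = {
    "messages": operator.add,  # append-only list
}

def apply_update(state: Dict[str, Any], update: Dict[str, Any]) -> Dict[str, Any]:
    """Return a new state with `update` merged in; the input is not mutated."""
    merged = dict(state)
    for key, value in update.items():
        if key in REDUCERS and key in merged:
            merged[key] = REDUCERS[key](merged[key], value)
        else:
            merged[key] = value
    return merged

def researcher(state: Dict[str, Any]) -> Dict[str, Any]:
    # A node returns only a diff, never the full state.
    return {"messages": ["researcher: found 3 sources"]}

def critic(state: Dict[str, Any]) -> Dict[str, Any]:
    return {"messages": ["critic: source 2 is weak"], "approved": False}

state = {"messages": ["user: summarize X"], "approved": None}

# Two parallel branches read the same snapshot; their diffs are merged afterwards.
updates = [node(state) for node in (researcher, critic)]
next_state = state
for update in updates:
    next_state = apply_update(next_state, update)
```

Because both branches return diffs against the same snapshot, neither can clobber the other's messages; the reducer decides how concurrent writes to one key combine.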

Algorithmic Control Flow

LangGraph introduces Conditional Edges, which effectively function as a router node: a function that inspects the current state and returns the name of the next node to run.
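A minimal sketch of such a router, again in plain Python rather than the library's API. The node names, the `END` sentinel, and the "approve on the third attempt" rule are assumptions made for the example; the point is that a conditional edge may send control back to an earlier node, which a DAG cannot express.

```python
from typing import Any, Callable, Dict

State = Dict[str, Any]
END = "__end__"  # sentinel name chosen for this sketch

def draft(state: State) -> State:
    n = state["attempts"] + 1
    return {"attempts": n, "draft": f"draft v{n}"}

def review(state: State) -> State:
    # Toy rule: approve on the third attempt.
    return {"approved": state["attempts"] >= 3}

def route_after_review(state: State) -> str:
    """Conditional edge: inspect the state, return the next node's name."""
    return END if state["approved"] else "draft"

NODES: Dict[str, Callable[[State], State]] = {"draft": draft, "review": review}
EDGES: Dict[str, Callable[[State], str]] = {
    "draft": lambda s: "review",   # unconditional edge
    "review": route_after_review,  # conditional edge, may loop back
}

def run(state: State, entry: str = "draft", max_steps: int = 20) -> State:
    current = entry
    for _ in range(max_steps):  # guard against unbounded cycles
        if current == END:
            return state
        state = {**state, **NODES[current](state)}
        current = EDGES[current](state)
    raise RuntimeError("step limit reached")

final = run({"attempts": 0, "approved": False})
```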

Technical Pros / Cons

Checkpointing (Time Travel): LangGraph serializes the state $S_t$ to a persistent store (Postgres/SQLite) after every superstep. This allows you to retrieve the state for a given thread_id and checkpoint_id (via get_state) and fork the execution history, which is crucial for "Human-in-the-loop" debugging.
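To make the mechanism concrete, here is a rough sketch of checkpoint-and-fork on top of an in-memory SQLite database. It is not LangGraph's checkpointer: the table layout and the helpers `save_checkpoint`, `load_checkpoint`, and `fork` are hypothetical, and the state is assumed to be JSON-serializable.

```python
import json
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE checkpoints ("
    " thread_id TEXT, checkpoint_id INTEGER, state TEXT,"
    " PRIMARY KEY (thread_id, checkpoint_id))"
)

def save_checkpoint(thread_id: str, state: dict) -> int:
    """Persist a snapshot and return its checkpoint id."""
    (next_id,) = conn.execute(
        "SELECT COALESCE(MAX(checkpoint_id), -1) + 1 "
        "FROM checkpoints WHERE thread_id = ?",
        (thread_id,),
    ).fetchone()
    conn.execute(
        "INSERT INTO checkpoints VALUES (?, ?, ?)",
        (thread_id, next_id, json.dumps(state)),
    )
    return next_id

def load_checkpoint(thread_id: str, checkpoint_id: int) -> dict:
    (raw,) = conn.execute(
        "SELECT state FROM checkpoints WHERE thread_id = ? AND checkpoint_id = ?",
        (thread_id, checkpoint_id),
    ).fetchone()
    return json.loads(raw)

def fork(thread_id: str, checkpoint_id: int, new_thread_id: str, patch: dict) -> dict:
    """Start a new thread from an old snapshot, with a human-supplied edit."""
    state = {**load_checkpoint(thread_id, checkpoint_id), **patch}
    save_checkpoint(new_thread_id, state)
    return state

# One snapshot per step of a toy run.
state = {"step": 0, "plan": "refund"}
for step in range(3):
    state = {**state, "step": step}
    save_checkpoint("t1", state)

# Rewind to step 1 and branch with a corrected plan; thread "t1" is untouched.
forked = fork("t1", 1, "t1-fork", {"plan": "escalate"})
```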

Verdict

The Industrial Standard. Best for deterministic finite automata (DFA) logic where state transitions must be explicitly verifiable.

2. CrewAI (The Hierarchical Process Manager)

Architectural Paradigm: Role‑Based Orchestration Layer.

Mathematical Model: Hierarchical Task Network (HTN).

CrewAI abstracts the low-level graph into a Process Manager. Early versions wrapped LangChain primitives; on top of that execution layer, the framework enforces a strict Delegation Protocol.

The Internals

Sequential Process: A simple linked list where each task's output becomes the input context for the next task.

Hierarchical Process: Utilizes a specialized Manager Agent (typically GPT‑4) running a simplified Map‑Reduce planner.

The Manager Algorithm

Decomposition: Given a high-level goal $G$, decompose it into subtasks.

Assignment: Assign each subtask to a worker, optimizing for embedding similarity between the subtask and the agent's role and tool_description.

Review Loop: The Manager evaluates each worker's output. If its quality falls below a threshold, the Manager triggers a recursive delegation_call back to the worker with feedback.
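The assignment and review steps can be sketched in a few lines. This is an illustration under stated assumptions, not CrewAI code: `Worker`, `assign`, and `manage` are hypothetical names, word overlap stands in for embedding similarity, the scoring function is a toy, and the subtasks are passed in directly where a real manager would ask an LLM to decompose the goal.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

@dataclass
class Worker:
    role: str
    tool_description: str
    run: Callable[[str, str], str]  # (task, feedback) -> output

def similarity(a: str, b: str) -> float:
    """Jaccard word overlap; a stand-in for embedding similarity."""
    wa, wb = set(a.lower().split()), set(b.lower().split())
    return len(wa & wb) / len(wa | wb) if wa | wb else 0.0

def assign(task: str, workers: List[Worker]) -> Worker:
    return max(workers, key=lambda w: similarity(task, f"{w.role} {w.tool_description}"))

def manage(subtasks, workers, score, threshold=0.5, max_retries=2) -> Dict[str, Tuple[str, str]]:
    results = {}
    for task in subtasks:
        worker = assign(task, workers)
        feedback = ""
        for attempt in range(max_retries + 1):
            output = worker.run(task, feedback)
            if score(output) >= threshold:
                break
            # Review loop: delegate back to the same worker with feedback.
            feedback = f"attempt {attempt + 1} scored below {threshold}; add detail"
        results[task] = (worker.role, output)
    return results

calls = {"researcher": 0, "writer": 0}

def researcher_run(task: str, feedback: str) -> str:
    calls["researcher"] += 1
    return "3 sources" if not feedback else "3 sources with citations and summaries"

def writer_run(task: str, feedback: str) -> str:
    calls["writer"] += 1
    return "Summary drafted in four clear paragraphs"

workers = [
    Worker("researcher", "search the web for sources", researcher_run),
    Worker("writer", "write and edit report text", writer_run),
]
score = lambda output: min(len(output.split()) / 5, 1.0)  # toy quality score
results = manage(["search sources on batteries", "write report summary"], workers, score)
```

Here the researcher's first answer scores below the threshold, so it is called a second time with feedback, while the writer passes on the first attempt.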

Technical Pros / Cons

Context Window Optimization: CrewAI implicitly handles the token window management, passing only relevant "Task Output" slices rather than the entire conversation history, preventing context overflow in long chains.
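The effect of forwarding only task outputs can be shown with a toy sequential chain. This sketch is an assumption-laden illustration rather than CrewAI's implementation: `run_sequential` and the three task functions are hypothetical, and context size is measured in characters as a crude proxy for tokens.

```python
from typing import Callable, List, Tuple

Task = Callable[[str], str]  # takes a context string, returns an output string

def run_sequential(tasks: List[Task], goal: str) -> Tuple[str, List[int]]:
    """Run tasks in order; each sees only the previous task's output."""
    context = goal
    context_sizes = []
    for task in tasks:
        context_sizes.append(len(context))
        context = task(context)  # only this output is forwarded
    return context, context_sizes

def research(context: str) -> str:
    return f"notes on {context}: " + "fact; " * 50  # deliberately verbose

def summarize(context: str) -> str:
    return context[:30]

def headline(context: str) -> str:
    return context.upper()

final, sizes = run_sequential([research, summarize, headline], "solid-state batteries")
```

The last task receives a 30-character context even though an earlier step produced several hundred characters, because the accumulated history is never passed along.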

Verdict: High‑Level Abstraction. Excellent for rapid scaffolding of cooperative multi‑agent systems, though it hides the underlying state transitions (Black Box State).

3. Microsoft AutoGen (The Conversational Topology)

Architectural Paradigm: Multi-Agent Conversation (Actor Model).

Mathematical Model: Markov Decision Process (MDP) over Dialogue.

AutoGen treats control flow as a byproduct of conversation. It implements an Actor Model where agents are independent entities that communicate exclusively via message passing.

The Internals

The core innovation is the GroupChatManager which implements a dynamic Speaker Selection Policy. Unlike a static graph, the next step is determined probabilistically or deterministically at runtime.
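A speaker selection policy is simply a function from the conversation so far to the next agent. The sketch below is plain Python, not AutoGen's API: `run_group_chat`, `round_robin`, and `random_no_repeat` are hypothetical names, the agents are canned lambdas, and a seeded random choice stands in for a model-driven selection.

```python
import random
from typing import Callable, Dict, List

Message = Dict[str, str]
Policy = Callable[[List[str], List[Message]], str]

def round_robin(names: List[str], history: List[Message]) -> str:
    """Deterministic policy: cycle through the agents in order."""
    return names[(len(history) - 1) % len(names)]

def random_no_repeat(rng: random.Random) -> Policy:
    """Probabilistic policy: any agent except the previous speaker."""
    def select(names: List[str], history: List[Message]) -> str:
        last = history[-1]["speaker"]
        return rng.choice([n for n in names if n != last])
    return select

def run_group_chat(agents, select: Policy, opening: str, max_turns: int = 6) -> List[Message]:
    history = [{"speaker": "user", "content": opening}]
    names = list(agents)
    for _ in range(max_turns):
        speaker = select(names, history)  # topology is decided turn by turn
        reply = agents[speaker](history)
        history.append({"speaker": speaker, "content": reply})
        if "TERMINATE" in reply:
            break
    return history

agents = {
    "planner": lambda history: "Plan: write, then review.",
    "coder": lambda history: "print('hello')",
    "reviewer": lambda history: "Looks good. TERMINATE",
}
fixed = run_group_chat(agents, round_robin, "Build a greeter.")
sampled = run_group_chat(agents, random_no_repeat(random.Random(0)), "Build a greeter.")
```

Swapping the policy changes the interaction graph without touching the agents, which is the sense in which control flow is a byproduct of the conversation.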

Code Execution Sandbox

AutoGen integrates a UserProxy that acts as a Local Execution Environment (using Docker):

- The Assistant emits a code block (e.g., Python).

- The UserProxy extracts the block → executes it in a container → captures stdout/stderr.

- Feedback Loop: If exit_code != 0, the stderr is injected back into the context as a new message, prompting the Assistant to debug.
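The extract-execute-feedback cycle can be sketched with the standard library. Two loud assumptions: a local subprocess stands in for the Docker container (so this sketch provides no isolation), and `scripted_assistant` is a canned stand-in for the LLM. None of the function names below are AutoGen's.

```python
import re
import subprocess
import sys

FENCE = "`" * 3  # built indirectly so this example can itself sit inside a fence
CODE_BLOCK = re.compile(FENCE + r"python\n(.*?)" + FENCE, re.DOTALL)

def extract_code(message: str):
    match = CODE_BLOCK.search(message)
    return match.group(1) if match else None

def execute(code: str, timeout: int = 10):
    """Run code in a child interpreter. NOT a sandbox; Docker would go here."""
    proc = subprocess.run(
        [sys.executable, "-c", code],
        capture_output=True, text=True, timeout=timeout,
    )
    return proc.returncode, proc.stdout, proc.stderr

def debug_loop(assistant, task: str, max_rounds: int = 3):
    messages = [task]
    for _ in range(max_rounds):
        reply = assistant(messages)
        messages.append(reply)
        code = extract_code(reply)
        if code is None:
            break
        exit_code, stdout, stderr = execute(code)
        if exit_code == 0:
            return stdout, messages
        # Feedback loop: the error becomes the next message in the context.
        messages.append(f"exitcode: {exit_code}\n{stderr}")
    return None, messages

def scripted_assistant(messages):
    """Canned replies: a buggy first attempt, then a fix once it sees the error."""
    if any("ZeroDivisionError" in m for m in messages):
        return f"Fixed:\n{FENCE}python\nprint(1 + 1)\n{FENCE}"
    return f"Try this:\n{FENCE}python\nprint(1 / 0)\n{FENCE}"

stdout, transcript = debug_loop(scripted_assistant, "Print the sum of 1 and 1.")
```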

Technical Pros / Cons

Dynamic Topology: The interaction graph is not pre‑defined. It emerges during runtime based on the conversation trajectory.

Verdict: Turing‑Complete Execution. The superior choice for Code‑Generation tasks requiring iterative interpretation and strictly isolated execution environments.

4. LlamaIndex Workflows (The Event-Driven Bus)

Architectural Paradigm: Event‑Driven Architecture (EDA) / Pub‑Sub.

Mathematical Model: Asynchronous Event Loops.

LlamaIndex pivoted from standard DAGs to Workflows, which decouple the "steps" from the "execution order."

The Internals

Step A: Process Input → emit(EventX)

Step B: @step(EventX) Process → emit(EventY)

This allows for complex Fan-Out patterns without explicit edge definitions. Multiple steps can listen to EventX and execute concurrently (as coroutines on Python's asyncio event loop, not in separate threads).
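The pattern can be reproduced with a toy event bus on top of asyncio. This is a sketch of the idea, not the LlamaIndex Workflows API: the `Bus` class, its `step` decorator, and the string event names are all hypothetical.

```python
import asyncio
from collections import defaultdict

class Bus:
    """Toy pub-sub bus: steps subscribe to event types; no edges are declared."""

    def __init__(self):
        self.handlers = defaultdict(list)

    def step(self, event_type: str):
        def register(fn):
            self.handlers[event_type].append(fn)
            return fn
        return register

    async def emit(self, event_type: str, payload):
        # Fan-out: every subscriber to this event runs concurrently.
        await asyncio.gather(*(h(payload) for h in self.handlers[event_type]))

bus = Bus()
results = []

@bus.step("Start")
async def step_a(text):
    await bus.emit("EventX", text.strip())

@bus.step("EventX")
async def summarize(text):
    await asyncio.sleep(0.01)  # stand-in for an LLM call
    await bus.emit("EventY", f"summary({text})")

@bus.step("EventX")
async def extract_keywords(text):
    await asyncio.sleep(0.01)
    await bus.emit("EventY", f"keywords({text})")

@bus.step("EventY")
async def collect(item):
    results.append(item)

asyncio.run(bus.emit("Start", "  quarterly report  "))
```

Adding a third consumer of EventX needs only another decorated function; no existing step has to change.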

Retrieval-Centric Design

LlamaIndex injects its Data Connectors deeply into the agent loop. It optimizes the "Context Retrieval" step using hierarchical indices or graph stores (like Property Graphs), ensuring the agent's working memory is populated with high‑precision RAG results before reasoning begins.

Verdict

Data‑First Architecture. Best for high‑throughput RAG applications where the control flow is dictated by data availability (e.g., document parsing pipelines) rather than logical reasoning loops.

5. Aden Hive (The Generative Compiler)

Architectural Paradigm: Intent-to-Graph Compilation (JIT Architecture).

Mathematical Model:

Aden Hive represents Generation 3 of agentic frameworks. It posits that static graphs are brittle. Instead of manually wiring nodes (LangGraph), Hive uses a meta-agent to compile the execution graph at runtime.

The Internals: Generative Wiring

Hive operates on a Goal‑Oriented architecture:

- Intent Parsing: The user defines a goal $G$.

- Structural Compilation: The "Architect Agent" generates a DAG specification (JSON/YAML) optimized for that specific goal, selecting nodes from a registry of capabilities.

- Runtime Execution: The system instantiates this ephemeral graph and executes it.
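The three stages above can be sketched end to end with the standard library. Everything here is hypothetical and chosen for illustration: the `REGISTRY` of capabilities, the JSON layout, and an `architect` function that returns a fixed spec where a real system would call a model. It is not Hive's actual interface.

```python
import json
from graphlib import TopologicalSorter  # Python 3.9+

# Hypothetical capability registry: node name -> callable over its inputs.
REGISTRY = {
    "search": lambda inputs: ["doc-a", "doc-b"],
    "summarize": lambda inputs: f"summary of {len(inputs['search'])} docs",
    "report": lambda inputs: f"REPORT: {inputs['summarize']}",
}

def architect(goal: str) -> str:
    """Stand-in for the meta-agent: returns a fixed JSON spec for any goal."""
    return json.dumps({
        "goal": goal,
        "nodes": {"search": [], "summarize": ["search"], "report": ["summarize"]},
    })

def compile_graph(spec_json: str):
    """Validate the generated spec against the registry and order it."""
    nodes = json.loads(spec_json)["nodes"]  # node -> list of dependencies
    unknown = set(nodes) - set(REGISTRY)
    if unknown:
        raise ValueError(f"unknown capabilities: {sorted(unknown)}")
    order = list(TopologicalSorter(nodes).static_order())  # CycleError if cyclic
    return nodes, order

def execute(nodes, order):
    outputs = {}
    for name in order:
        inputs = {dep: outputs[dep] for dep in nodes[name]}
        outputs[name] = REGISTRY[name](inputs)
    return outputs

nodes, order = compile_graph(architect("Brief me on topic X"))
outputs = execute(nodes, order)
```

Validating the generated spec before execution matters more here than in a hand-wired graph, since the topology comes from a model rather than a developer.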

Self-Healing via OODA Loop

Hive implements a structural Observe‑Orient‑Decide‑Act (OODA) loop at the infrastructure level:

- Observe: Monitor Step failure rate.

- Orient: If failures persist, the Architect pauses execution.

- Decide: It rewrites the node's prompt or logic, or rewires the graph to bypass the failing node.

- Act: Resume execution with the new graph topology.
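As a rough illustration of the loop, the sketch below runs a linear pipeline and bypasses a node once it has failed repeatedly. The names (`run_with_healing`, the handlers, `max_failures`) are hypothetical, and the repair strategy is the simplest possible one: dropping the node, which is only valid when that node is optional. Rewriting a prompt would be a different branch of the Decide step.

```python
from typing import Any, Callable, Dict, List

def run_with_healing(topology: List[str], handlers: Dict[str, Callable[[Any], Any]],
                     value: Any, max_failures: int = 2):
    topology = list(topology)  # work on a copy; the caller's graph is untouched
    failures: Dict[str, int] = {}
    i = 0
    while i < len(topology):
        node = topology[i]
        try:
            value = handlers[node](value)
            i += 1
        except Exception:
            # Observe: track the failure count per node.
            failures[node] = failures.get(node, 0) + 1
            # Orient: a single failure is retried; a persistent one is not.
            if failures[node] < max_failures:
                continue
            # Decide: rewire the graph to bypass the failing node.
            topology.pop(i)
            # Act: the loop resumes on the new topology at the same index.
    return value, topology, failures

def enrich(value):
    raise TimeoutError("enrichment service unavailable")

handlers = {
    "fetch": lambda _: "raw text",
    "enrich": enrich,
    "summarize": lambda text: text.upper(),
}
result, healed_topology, failure_counts = run_with_healing(
    ["fetch", "enrich", "summarize"], handlers, None
)
```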

Parallelization Primitives

Hive treats concurrency as a first-class citizen using a Scatter-Gather pattern injected automatically by the compiler.

- If a goal implies multiple independent searches, the compiler automatically generates a Fan-Out node structure without the developer explicitly coding asyncio.gather.
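The fan-out described above could look like the following once generated. This is a hand-written sketch of what such a compiler pass might emit, under the assumption that the independent queries have already been identified; `compile_fan_out` and `search` are hypothetical names.

```python
import asyncio
from typing import Awaitable, Callable, List

async def search(query: str) -> str:
    await asyncio.sleep(0.01)  # stand-in for a network call
    return f"results for {query!r}"

def compile_fan_out(queries: List[str], worker: Callable[[str], Awaitable[str]]):
    """Hypothetical compiler pass: wrap independent queries in scatter-gather."""
    async def fan_out() -> List[str]:
        # Scatter: one coroutine per query. Gather: results keep input order.
        return await asyncio.gather(*(worker(q) for q in queries))
    return fan_out

node = compile_fan_out(["pricing", "competitors", "reviews"], search)
gathered = asyncio.run(node())
```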

Verdict: The Adaptive Future. It solves the "Fragility Problem" of manual orchestration. While LangGraph requires you to predict every edge case, Hive structurally evolves to handle them.

Final Technical Verdict: Complexity Trade-off

Recommendations for Architects

- Use LangGraph if you are building a Stateful Application (e.g., a customer support bot with a specific escalation policy). You need the deterministic guarantees of a Finite State Machine.

- Use AutoGen if you are building a DevTool. The Docker‑based execution sandbox is non‑negotiable for safe code generation.

- Use Aden Hive if you are building AGI‑adjacent systems. If your problem space is too complex to map out manually (e.g., "Research a cure for cancer"), you need an architecture that allows the AI to design its own workflow. Hive moves the "intelligence" from the node level to the topology level.